Decision trees and random forests

Decision Trees

Decision trees are pretty intuitive.

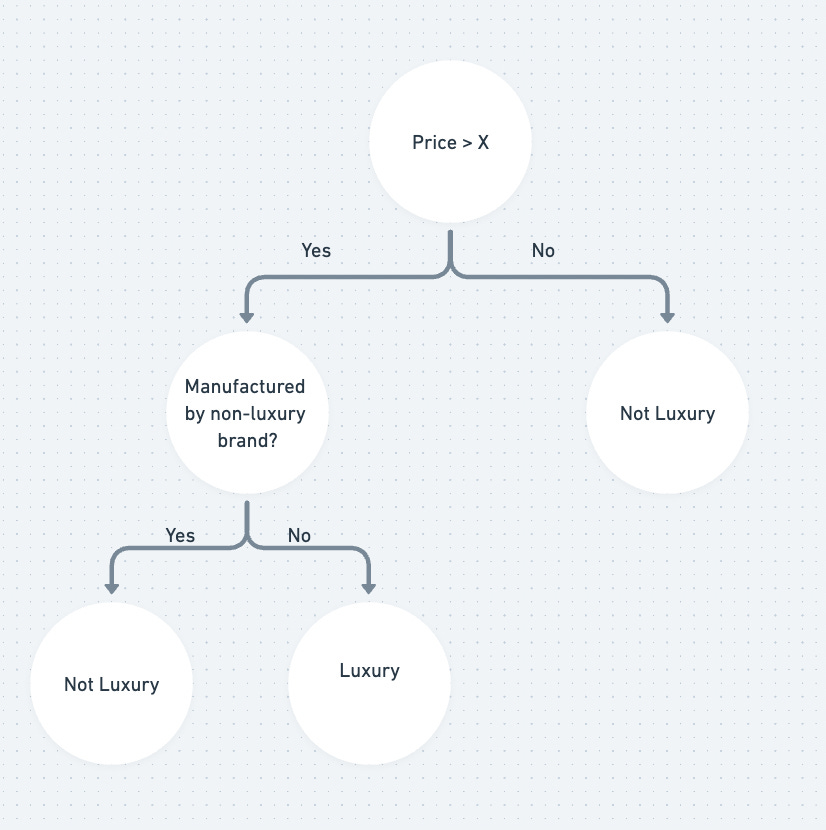

Suppose we have tabular data (rows and columns) that we’d like to sort into categories. We start by making a binary split of the data on the most important column. For example, if we’re categorising cars as luxury or non-luxury, price is a natural place to start: cars above some threshold go down one branch and the rest go down the other.

A single split like this is the simplest possible decision tree. We can keep splitting the resulting nodes on other columns so that the tree takes more information into account. For example, perhaps some expensive cars are sold by a manufacturer that isn’t considered a legacy brand. We could add a few nodes to handle that.
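To make the idea concrete, here is a minimal sketch of the car example written as plain nested conditions. The price threshold and the `legacy_brand` column are made-up assumptions for illustration, not values learned from data.

```python
def is_luxury(price, legacy_brand):
    """Hand-written two-level decision tree for the car example.

    The 50,000 threshold and the `legacy_brand` flag are hypothetical.
    """
    if price <= 50_000:
        # First split: cheaper cars are labelled non-luxury straight away.
        return False
    # Second split, reached only by expensive cars: check the manufacturer.
    return bool(legacy_brand)


print(is_luxury(30_000, True))   # cheap car from a legacy brand
print(is_luxury(90_000, True))   # expensive car from a legacy brand
print(is_luxury(90_000, False))  # expensive car from a newer brand
```

A trained decision tree has the same shape; the difference is that the columns and thresholds are chosen from the data rather than by hand.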

The key is choosing the right columns and split values. To pick a good split, we define a score for candidate splits and choose the one with the best score. Several metrics can be used; for classification, Gini impurity and entropy are common choices, and both measure how mixed the classes are on each side of the split.
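As a rough sketch of what "score every split and keep the best one" can look like, the snippet below uses Gini impurity as the score for a single numeric column. The helper names (`gini`, `split_score`, `best_split`) and the toy data are assumptions for illustration; this is not how scikit-learn implements it internally.

```python
import numpy as np


def gini(y):
    """Gini impurity of a label array: 0 means every row has the same class."""
    if len(y) == 0:
        return 0.0
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def split_score(x, y, threshold):
    """Size-weighted Gini impurity of the two sides of `x <= threshold`."""
    left = x <= threshold
    n_left, n_right = left.sum(), (~left).sum()
    return (n_left * gini(y[left]) + n_right * gini(y[~left])) / len(y)


def best_split(x, y):
    """Try each observed value as a threshold; return the lowest-scoring one.

    Assumes the column has at least two distinct values.
    """
    # Splitting at the maximum would leave the right side empty, so skip it.
    candidates = np.unique(x)[:-1]
    scores = [split_score(x, y, t) for t in candidates]
    i = int(np.argmin(scores))
    return candidates[i], scores[i]


# Toy data (made up): price in thousands, label 1 = luxury.
price = np.array([20, 25, 30, 60, 80, 90])
luxury = np.array([0, 0, 0, 1, 1, 1])

threshold, score = best_split(price, luxury)
print(threshold, score)
```

Lower is better here: a score of 0 means both sides of the split contain a single class. A full tree builder would repeat this search over every column and then recurse into each side.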

scikit-learn has a decision tree class that does all this for us in a few lines of code. Creating a decision tree is as simple as:

from sklearn.tree import DecisionTreeClassifier

# min_samples_leaf=25: every leaf must contain at least 25 training rows
m = DecisionTreeClassifier(min_samples_leaf=25).fit(trn_xs, trn_y)

Random Forests

Random forests are ensembles of decision trees. A single deep tree tends to overfit the training data, and it’s hard to tune one tree to avoid that. Instead, we can train a large number of decision trees and average their predictions. This is known as bagging (short for bootstrap aggregating).

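Each tree is trained on a random sample of the original training data (in a random forest, typically a bootstrap sample drawn with replacement, with a random subset of columns considered at each split). The high-level idea is that if we average a large number of predictions from models whose errors are largely uncorrelated, those errors tend to cancel out and the averaged prediction lands closer to the true value.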
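The bagging idea can be sketched by hand with ordinary decision trees. Everything below is an illustrative assumption: the data is synthetic (from `make_classification`), the number of trees and `min_samples_leaf` are arbitrary, and the averaging step is a simplified version of what an ensemble does.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data; not the data set used in this post.
X, y = make_classification(n_samples=600, n_features=10, n_informative=5,
                           random_state=0)
X_trn, X_val, y_trn, y_val = train_test_split(X, y, test_size=0.25,
                                              random_state=0)

rng = np.random.default_rng(0)
trees = []
for _ in range(50):
    # Bootstrap sample: draw row indices with replacement.
    idx = rng.integers(0, len(X_trn), size=len(X_trn))
    tree = DecisionTreeClassifier(
        min_samples_leaf=5,
        max_features="sqrt",  # random subset of columns at each split
        random_state=int(rng.integers(1_000_000)),
    )
    trees.append(tree.fit(X_trn[idx], y_trn[idx]))

# Average the predicted probability of class 1 across all trees.
avg_proba = np.mean([t.predict_proba(X_val)[:, 1] for t in trees], axis=0)
ensemble_pred = (avg_proba >= 0.5).astype(int)

single_acc = (trees[0].predict(X_val) == y_val).mean()
ensemble_acc = (ensemble_pred == y_val).mean()
print(f"single tree: {single_acc:.3f}, averaged trees: {ensemble_acc:.3f}")
```

On most runs the averaged prediction should do at least as well as any one tree, but the exact numbers depend on the data and the random seeds.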

It’s very easy to create random forests using scikit-learn.

from sklearn.ensemble import RandomForestClassifier

# 100 trees; every leaf must contain at least 5 training rows
rf = RandomForestClassifier(n_estimators=100, min_samples_leaf=5)

rf.fit(trn_xs, trn_y)

Both of these models are relatively simple but often give good results. Decision trees and random forests are also useful for exploring our data: the trees we build tell us which features matter more and which matter less.
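One way to look at feature importance is the `feature_importances_` attribute that scikit-learn exposes on a fitted forest. The sketch below uses synthetic data and hypothetical column names (`col_0`, `col_1`, ...) because the post's own data set isn't shown.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data with 3 informative columns out of 6.
X, y = make_classification(n_samples=500, n_features=6, n_informative=3,
                           n_redundant=0, random_state=0)
cols = [f"col_{i}" for i in range(X.shape[1])]  # hypothetical column names

rf = RandomForestClassifier(n_estimators=100, min_samples_leaf=5,
                            random_state=0).fit(X, y)

# Impurity-based importances: one non-negative value per column, summing to 1.
importances = sorted(zip(cols, rf.feature_importances_),
                     key=lambda pair: pair[1], reverse=True)
for name, value in importances:
    print(f"{name}: {value:.3f}")
```

These importances are impurity-based, so treat them as a first look at the data rather than a definitive ranking.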

Obviously biased, but I have a soft spot for https://github.com/microsoft/LightGBM personally.